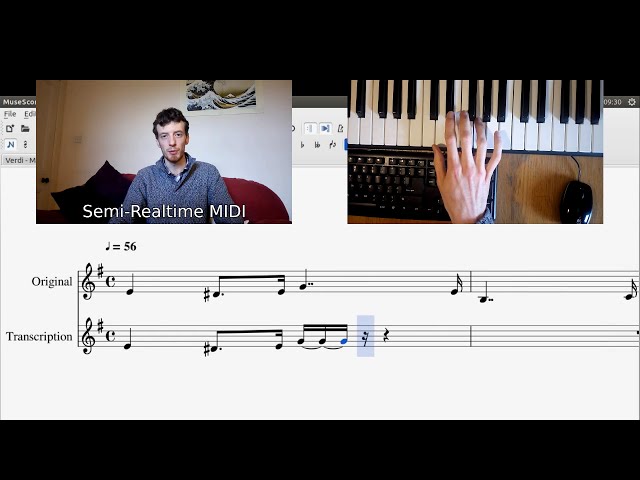

GSoC 2016 - Semi-Realtime MIDI: A new method of note entry for MuseScore

Hi! My name is Peter, and I have been selected to work with MuseScore for this year’s Google Summer of Code. My project is titled “Semi-Realtime MIDI”, and the aim is to create a new mode of note entry for MuseScore - or actually a few different modes - that would basically allow users to enter notes by performing the piece on a MIDI keyboard and having MuseScore translate the performance into the correct musical notation.

You can keep up with my progress at the following locations:

- Weekly blog right here at MuseScore.org or via my own website.

- The actual code and pull requests from my fork on GitHub.

- Occasional video updates via my YouTube channel.

First video: Introducing Semi-Realtime MIDI

Project details

The ability to automatically transcribe a live performance is known as “realtime MIDI”, and it is extremely difficult to do accurately due to the imperfections inherent to any human performance - such as slight changes in tempo that may be imperceptible to listeners but that could wreak havoc with the computer algorithm! My idea, which I am calling “semi-realtime MIDI”, places certain limitations on the user that restrict their ability to play freely, but which are vital to ensure accurate notation.

I envision two modes of semi-realtime MIDI, both of which I intend to implement. The modes are “automatic” and “manual”, which refer to whether the position of note input (i.e. the “cursor”) advances automatically at a fixed speed or manually on the press of a key. In automatic mode, an audible metronome would enforce a strict tempo, much slower than the playback tempo, to aid accurate MIDI interpretation. In manual mode, the metronome clicks are replaced by the user tapping a key with their free hand, or (ideally, if they have one) by tapping the MIDI sustain pedal so that both hands can be used to play notes. These key/pedal taps can be as frequent or infrequent as desired, and the user could even vary the frequency mid-piece to slow down for difficult sections and speed up for easier ones. Automatic mode is arguably the closer of the two to traditional realtime MIDI, so it will probably be labelled simply as “Realtime MIDI” in the menu rather than “Automatic Semi-Realtime MIDI”. Manual mode would just be called “Semi-realtime MIDI”. But suggestions for alternative names are welcome - perhaps “metronome mode” and “foot-tapping mode” would be more descriptive titles! ;)
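To make the manual mode concrete, here is a minimal Python sketch built on assumptions of my own (it is not the project's code): every tap advances the cursor by one step, one step being the smallest selected duration, and a key held across N taps becomes a note N steps long. The `Event` type and `manual_mode` function are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Event:
    time: float      # seconds since input started
    kind: str        # "on" / "off" for a MIDI key, "tap" for the pedal or tap key
    pitch: int = -1  # MIDI pitch number; unused for "tap"

def manual_mode(events):
    """Turn key presses into (pitch, start_step, length_in_steps).

    Hypothetical model: the cursor starts at step 0 and advances by one step
    (one unit of the selected smallest duration) on every tap, so only the
    order of events matters, not the wall-clock time between them.
    """
    step = 0
    held = {}    # pitch -> cursor step at which the key went down
    notes = []
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "tap":
            step += 1
        elif ev.kind == "on":
            held[ev.pitch] = step
        elif ev.kind == "off" and ev.pitch in held:
            start = held.pop(ev.pitch)
            # A key released before the next tap still gets one step.
            notes.append((ev.pitch, start, max(1, step - start)))
    return notes
```

For example, pressing middle C (60), tapping twice, releasing, then pressing D (62) and tapping once more yields `[(60, 0, 2), (62, 2, 1)]`, however long the player pauses between taps. That independence from wall-clock time is what lets the user slow down for difficult passages.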

Of course, MuseScore already allows you to enter notes with a MIDI keyboard via the existing “step-time” method, which you are probably quite familiar with. To enter notes in step-time mode, you must first select the note duration from the toolbar before pressing the key to select the pitch. This method is extremely reliable - you are guaranteed to get what you want (or at least what you asked for!). However, this method can be painstakingly slow at times, especially if the passage of notes you are entering has a complicated rhythm with lots of different note lengths. Musicians are not used to having to “choose” a note length before it is played - normally they just sustain the note for the required duration - so a realtime or semi-realtime approach should actually be more natural from a musician’s point of view. Adjusting the note length based on the length of time for which the key is held removes the need for the user to constantly swap between the MIDI keyboard and the computer mouse/keyboard, so the new method of note entry should be much faster too!

I’m extremely grateful to MuseScore and Google for providing this opportunity, and I am very much looking forward to working with Nicolas (lasconic) and the rest of the community over the coming weeks and months!

Comments

This is what I call jaw-dropping awesomeness. Way to go, Peter!

Something that caught my attention in the video mockup was the way the notes began as multiple short notes tied to each other and were then concatenated into single, longer notes (sometimes dotted). If you can implement this, the same framework could solve a related problem. For example, a time signature change splits notes up and ties them across barlines, and if you then reverse (not undo) the time signature, you're stuck with the ties. There's currently no way to automatically merge the notes back together.

In reply to This is what I call by Isaac Weiss

Thanks very much!

My method is basically to record lots of small notes tied together and, once the key is released (or at the end of a measure) export as a MIDI file and then re-import so that the tied notes are combined into one. Of course, the whole export-import business would be seamless and hidden from the user.
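MIDI has no concept of a tie: a note is just a note-on followed by a note-off, so a chain of tied fragments naturally comes back as one long note. The Python sketch below imitates that effect in memory rather than through an actual export/import, assuming a resolution of 480 ticks per quarter note and looking for a single plain or dotted note value per result. `merge_ties` and `spell` are hypothetical helpers, not MuseScore functions.

```python
TICKS_PER_QUARTER = 480  # assumed resolution for this sketch

# Plain note values in ticks, longest first.
NOTE_VALUES = {
    4 * TICKS_PER_QUARTER: "whole",
    2 * TICKS_PER_QUARTER: "half",
    TICKS_PER_QUARTER: "quarter",
    TICKS_PER_QUARTER // 2: "eighth",
    TICKS_PER_QUARTER // 4: "16th",
}

def merge_ties(fragments):
    """fragments: (pitch, start, duration, tied_to_next) tuples in time order.
    Returns (pitch, start, duration) with each tie chain collapsed into one
    note, which is the effect a MIDI round trip has."""
    merged = []
    open_chains = {}  # pitch -> index in merged of a note still tied forward
    for pitch, start, duration, tied in fragments:
        if pitch in open_chains:
            i = open_chains.pop(pitch)
            p, s, d = merged[i]
            merged[i] = (p, s, d + duration)
        else:
            i = len(merged)
            merged.append((pitch, start, duration))
        if tied:
            open_chains[pitch] = i
    return merged

def spell(duration):
    """Name a duration as one plain or dotted note value, or return None
    if it would still need a tie."""
    for ticks, name in NOTE_VALUES.items():
        if duration == ticks:
            return name
        if 2 * duration == 3 * ticks:  # dotted = 1.5 x the plain value
            return "dotted " + name
    return None
```

Three tied sixteenths on middle C, `merge_ties([(60, 0, 120, True), (60, 120, 120, True), (60, 240, 120, False)])`, collapse to `[(60, 0, 360)]`, and `spell(360)` gives `"dotted eighth"`.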

You are correct: a similar method could be used after a time signature change or copy-paste to combine notes that had been tied across a barline. However, it would also result in all non-pitch information being lost, like text, dynamics, articulations and layout adjustments (at least with the basic implementation I had in mind). It might be possible to do the export-import just for the two tied notes; it's worth investigating. It might also be useful to have a feature where you can select a group of notes and have them completely re-rendered based only on their pitch and timing information (i.e. export a selection as MIDI and re-import it into the same score), a bit like automatic beaming or the "respell pitches" feature, but for rhythms. Again, though, it's difficult to see how text and symbols could be preserved with such a feature.

In reply to Thanks very much! My method by shoogle

Ah, I see. Never mind then. Your approach makes more sense than implementing something from scratch to combine the tied notes.

In reply to Thanks very much! My method by shoogle

"or at the end of a measure"

I'm sure you've thought about pros and cons of measure vs after the key is released.

I vote for the end of a measure. There won't be any tuplets across a barline, and sustained notes will have to be tied across barlines anyway, so the barline provides fixed boundary conditions. A measure seems to be the most practical smallest unit of time for analyzing MIDI, especially when considering complexities such as multiple voices.
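Measure-sized processing also makes barlines easy to handle: any note that crosses one becomes tied segments, one per measure. A minimal Python sketch, assuming integer tick positions and a fixed measure length (e.g. 1920 ticks for 4/4 at a hypothetical 480 ticks per quarter note); `split_at_barlines` is illustrative only.

```python
def split_at_barlines(start, duration, measure_len):
    """Split one note into per-measure segments.

    Returns (start, duration, tied_to_next) tuples. Assumes a constant
    measure length in ticks (no time signature changes).
    """
    segments = []
    end = start + duration
    while start < end:
        next_barline = (start // measure_len + 1) * measure_len
        seg_end = min(end, next_barline)
        segments.append((start, seg_end - start, seg_end < end))
        start = seg_end
    return segments
```

A half note starting on beat 4 of a 4/4 bar, `split_at_barlines(1440, 960, 1920)`, gives `[(1440, 480, True), (1920, 480, False)]`: a quarter note tied over the barline to another quarter note.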

This is great news, indeed! :-) I was close to starting to hack on something myself (the current note entry mode is so annoying to work with), but I knew I would not have the time to finish it. So I love seeing someone doing it properly. Great!

OK, so the problem of segmenting "raw" user input into correct note lengths is a really tough one and I think your approach is a very good one: conceptually convincing and reliable yet flexible. The general problem, on the other hand, is a perfect use case for advanced machine learning techniques (most notably inference methods), which is my area of research. However, machine learners (like me) are lazy people and love clean interfaces for applying their algorithms – this is what kept me from implementing something myself.

Now, here is my point: it might be relatively easy for you to structure your code so that there IS a clean interface where machine learning people can plug in their algorithms. If such an interface could be created as a byproduct of your work, MuseScore might end up not only with a really nice note entry mode (or actually two) but also as a playground for musically inclined machine learners. This might promote even more convenient and/or sophisticated note entry modes in the future, and it is a feature that non-open-source alternatives can hardly offer.
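One possible shape for such an interface, sketched in Python purely for illustration: a plug-in receives the raw performance and a grid step, and returns notes positioned on that grid. `RawNote`, `QuantizedNote`, `Quantizer` and `NearestGrid` are hypothetical names, not part of MuseScore's plugin API. The baseline simply rounds to the nearest grid point; a machine-learning model would be another subclass with the same signature.

```python
from abc import ABC, abstractmethod
from typing import List, NamedTuple

class RawNote(NamedTuple):
    pitch: int      # MIDI pitch number
    onset: float    # seconds
    offset: float   # seconds
    velocity: int

class QuantizedNote(NamedTuple):
    pitch: int
    start: int      # in grid steps
    length: int     # in grid steps

class Quantizer(ABC):
    """Hypothetical plug-in boundary: raw performance in, rhythm out."""

    @abstractmethod
    def quantize(self, notes: List[RawNote], step_s: float) -> List[QuantizedNote]:
        ...

class NearestGrid(Quantizer):
    """Baseline plug-in: snap onset and offset to the nearest grid step."""

    def quantize(self, notes, step_s):
        out = []
        for n in notes:
            start = round(n.onset / step_s)
            end = round(n.offset / step_s)
            out.append(QuantizedNote(n.pitch, start, max(1, end - start)))
        return out
```

Keeping the boundary to plain data in and plain data out is the point: a plug-in author would only need to implement `quantize`, without touching the rest of the application.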

I promise to provide at least one more note entry mode myself ;-)

In reply to This is great news, indeed! by Robert Lieck

Machine learning is something I am interested in too, though I don't currently have much experience in the area. My intention is actually to use/adapt the existing MIDI import code to deal with things like voice separation, rhythm-simplification, etc. In terms of a clean interface, are you just referring to standard clean-code practices or was there something specific you had in mind?

If machine learning is something you have some experience in then perhaps you would be interested in the OMR effort? MuseScore's developers made an attempt at OMR (Optical Music Recognition) a few years ago but it was abandoned. However, it looks like there might be another attempt soon. Edit: Also see this page.

In reply to Machine learning is something by shoogle

Sorry for the late reply.

Trying to stay practical, I think the best option would be an interface that lets you specify a Python file in which the user can implement anything they want, as a kind of plug-in. Using Python makes it flexible (no compiling) and accessible to a wider audience. By "clean interface" I was referring to the representation/data type in which the data are passed to and from the plug-in. It would already be highly beneficial if this were just MIDI-like input and output that allowed some intermediate processing of the data. Ideally, this would be a broken-down representation such as an array of (track, channel, pitch, onset, offset, velocity) tuples, similar to what this Matlab lib provides: http://kenschutte.com/midi. This should be easy to convert back to MIDI in order to use MuseScore's existing MIDI import function.
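As a sketch of that broken-down representation, the Python function below pairs note-on and note-off messages into (track, channel, pitch, onset, offset, velocity) tuples. The input format is an assumption: plain tuples whose `kind` strings follow the `note_on`/`note_off` naming used by MIDI libraries such as mido, with times already converted to seconds. A note-on with velocity 0 is treated as a note-off, as the MIDI convention allows. `to_note_tuples` is a hypothetical helper.

```python
def to_note_tuples(messages):
    """messages: iterable of (time_s, track, channel, kind, pitch, velocity)
    with kind "note_on" or "note_off". Returns
    (track, channel, pitch, onset, offset, velocity) tuples sorted by onset."""
    pending = {}  # (track, channel, pitch) -> (onset, velocity) of a sounding note
    notes = []
    for time, track, channel, kind, pitch, velocity in sorted(messages, key=lambda m: m[0]):
        key = (track, channel, pitch)
        if kind == "note_on" and velocity > 0:
            pending[key] = (time, velocity)
        elif key in pending:
            # note_off, or note_on with velocity 0: close the sounding note.
            onset, vel = pending.pop(key)
            notes.append((track, channel, pitch, onset, time, vel))
    return sorted(notes, key=lambda n: (n[3], n[2]))
```

Each tuple carries the raw timing and velocity, so nothing from the performance is lost before an algorithm sees it.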

A second step would be to allow for interactive processing, which would open up a new dimension with a lot of really interesting methods and applications. The question here is which user interface is best suited. Ideally, the user should be able to correct details of the intermediate result, which in turn allows better inference in the next iteration. I'd be happy to discuss this in more detail, but I think it is out of scope for now.

However, a very simple plug-in mechanism with user-provided Python scripts and a broken-down MIDI-like representation would already be a huge enrichment for MuseScore!

In reply to Machine learning is something by shoogle

@Robert, that doesn't sound likely to happen, I'm afraid. MuseScore already has a Qt-based plugin interface, so I don't think the developers would really want to bundle a Python interpreter too. I doubt that using Python would generate significantly more interest in plugin development than using Qt, at least as far as note entry is concerned, and it would be more restrictive in terms of what you would be able to do with it. In any case, real-time input is a valuable feature for any notation program, so I think MuseScore's developers would prefer to have the analysis done internally rather than relying on a plugin.

The best I could realistically offer would be to print the MIDI information on STDOUT or into a file which you can parse with another program, but this is already possible using audio servers like ALSA so there is no point in adding it to MuseScore, especially since you need ALSA installed to be able to use MIDI in MuseScore anyway.

Ultimately, MuseScore can already import and export MIDI files, and you can find MIDI parsers for pretty much every programming language out there.

In reply to @Robert, that doesn't sound by shoogle

I agree that the JavaScript/QtScript-based plugin interface is a nice solution for general-purpose plugins and would even allow running a Python script (or basically anything) on the score. Two things that are not so nice about it, when it comes to note entry, are data representation and workflow. The MIDI data contain more information than what ends up in the score, and they are the genuine raw data. This information is relevant and should not get lost. And in terms of workflow, it is impractical to click my way through various applications and menus to get a couple of bars of MIDI input into my score.

Printing the MIDI information to a file would preserve that information and smooth the workflow a lot, since it relieves the user of switching applications. The user could then run a plugin on the file to process the data and incorporate the results into the score. If one could additionally specify a plugin that runs automatically after note entry is finished, this would eliminate even this last step and, to me, would be basically as good as what I initially suggested.

While the MIDI representation is somewhat cumbersome when it comes to feeding it to a machine learning algorithm, I agree that this is a minor hurdle. However, it would be great if the MIDI beat clock were synchronized to the metronome played during input.
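MIDI beat clock sends 24 Timing Clock messages (status byte 0xF8) per quarter note, so if the clock were locked to the input metronome, note timestamps could be expressed in beats directly. A minimal Python sketch, assuming the arrival times of the clock pulses have been recorded and the first pulse marks beat 0; `beat_position` is a hypothetical helper that interpolates between neighbouring pulses.

```python
import bisect

PPQN = 24  # MIDI beat clock: 24 Timing Clock (0xF8) messages per quarter note

def beat_position(event_time, clock_times):
    """Position of event_time in quarter-note beats, given the sorted arrival
    times of the clock pulses (first pulse = beat 0)."""
    i = bisect.bisect_right(clock_times, event_time) - 1
    if i < 0:
        return 0.0  # event happened before the first pulse
    if i + 1 < len(clock_times):
        # Interpolate linearly between pulse i and pulse i + 1.
        span = clock_times[i + 1] - clock_times[i]
        frac = (event_time - clock_times[i]) / span
    else:
        frac = 0.0
    return (i + frac) / PPQN
```

Because the beat position comes from the pulses themselves, small tempo drift in the clock source is absorbed automatically, which is the benefit of synchronizing the clock with the metronome.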

Concerning internal versus plugin-based processing, the difficulty is that doing all this in an "intelligent" way is still an unsolved problem. So the best one can do is incorporate a relatively simple but reliable method, which is just what you are about to do. Plugins, on the other hand, allow the (scientific) community to play around with fancier but unreliable methods. Once one of these is stable, it can be incorporated too.

In reply to @Robert, that doesn't sound by shoogle

This project will actually form the dissertation for my master's degree in Computer Science, so I am kind of a member of the scientific community myself. I am using an internal representation that I think is very intuitive, so I would encourage you to make a fork of MuseScore and do your development within MuseScore itself (after the project is over and the code has been added, of course). MuseScore is written in C++/Qt which, like Python, is an object-oriented language. C++ is considered more difficult than Python because it is a lower-level language, but that doesn't affect you if you are just implementing a mathematical algorithm. There are examples you can follow within MuseScore's code already, and there is plenty of documentation to get you set up if you've never programmed in C++/Qt before. Don't hesitate to ask in the forum or on IRC if you need more help.

In reply to This project will actually by shoogle

Yes, maybe I could build on your work to implement a machine learning interface. I have several years of experience with C++/Qt, so the language/framework is not the problem. It's more that the overhead of working your way through the guts of a new project for several hours or days, until you reach the point where you can actually start implementing your thing, is hard to afford if it's just about testing a small ML algorithm (especially since everyone has to do it anew). I'll have a look at your code once it is upstream; it sounds like a good basis for what I'm aiming at.

And irrespective of the machine learning stuff, I'm really looking forward to using your input methods! It's going to be a huge improvement in practice! Thanks a lot for that! :-)

I love the idea here! I just have one question: will there be some sort of filter for subdivisions, so we can choose how precise we want to be? Like some sort of grid UI where you can select/deselect minimums and/or types of notes?

(For example, the smallest duration might be a quarter note in a relatively fast song that only uses quarter notes and half notes, while for a different type of song sixteenth notes would be the minimum subdivision, like the one you used in the video. The ability to toggle triplets or grace notes on and off would also be nice, but I can see that might be a little too much to do in this amount of time.)

I didn't know whether you were already planning to do something like this, but I was thinking it could also save a lot of time if the musician didn't always have to play with that much precision. From personal experience, while sight-reading, I sometimes don't hold my notes for their full value so that I can (sort of) prepare for some jumps. I'm less experienced, so if the part I'm inputting only uses quarter notes and longer, it would be nice to know that a quarter note will still be written while I prepare my hand for something else. (That way I can save time while arranging by playing faster, without having to worry about holding my notes for their full value.)
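A user-selectable minimum subdivision amounts to snapping onsets and releases to a grid. The Python sketch below assumes times already measured in quarter-note beats; the `smallest` setting and the triplet toggle correspond to the options requested above, and `snap`/`quantize_note` are hypothetical helpers. Rounding the release to the nearest grid point, with a floor of one grid unit, is what lets a note released early still be written at full value.

```python
from fractions import Fraction

def snap(value, smallest, allow_triplets=False):
    """Snap a time in quarter-note beats to the nearest allowed grid point.

    smallest: grid size in beats, e.g. Fraction(1, 4) for sixteenths or
    Fraction(1) for quarters. With allow_triplets, a grid 2/3 that size is
    also tried; on an exact tie the straight grid wins.
    """
    value = Fraction(value)
    grids = [Fraction(smallest)]
    if allow_triplets:
        grids.append(grids[0] * Fraction(2, 3))
    candidates = [round(value / g) * g for g in grids]
    return min(candidates, key=lambda c: abs(c - value))

def quantize_note(onset, release, smallest, allow_triplets=False):
    """Return (start, length) in beats; a note never collapses to zero length."""
    start = snap(onset, smallest, allow_triplets)
    end = snap(release, smallest, allow_triplets)
    return start, max(end - start, Fraction(smallest))
```

With quarter notes as the smallest value, a key pressed on the beat and released at 0.71 or even 0.4 of a beat still gives `(0, 1)`, i.e. a full quarter note. With sixteenths and triplets enabled, a release at 0.32 beats snaps to 1/3 rather than 1/4.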

If you were going to add something like this, great, and if not, I think it'd be really helpful once you've done the main part of the project. I really like your idea, though. It's exactly the feature I was looking for!

In reply to I love the idea here! I just by speedmeteor101

Thanks for the feedback!

The user will be able to select the smallest duration - it will probably just be whichever note-length is currently selected in the toolbar.

I have given some thought to the issue of precision, and I have decided that my first attempt will require each note to be held for its full duration (because that is easiest to code for). I will then use my remaining time on the project to investigate ways to improve it, including relaxing the precision requirement. It should be possible to create an algorithm that assumes the start of the note was exact but allows you to be "lazy" about when you release the note. If you have ever imported a MIDI file with MuseScore, you will know that the MIDI import algorithm does something like this already, and it is my intention to borrow this code.
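The "lazy release" idea can be illustrated with a deliberately simple Python sketch for a single voice, assuming onsets have already been placed on a grid and are trusted: a gap shorter than `min_rest` steps before the next onset is read as an early release and absorbed, and an overlap is trimmed. This is not MuseScore's MIDI import algorithm, which handles many more cases; `lazy_release` is hypothetical.

```python
def lazy_release(notes, min_rest):
    """notes: (pitch, start, end) tuples in grid steps for one voice, sorted
    by start. Onsets are kept as played; each release is moved to the next
    onset unless the gap is at least min_rest steps, in which case the gap
    is kept and becomes a rest."""
    result = []
    for i, (pitch, start, end) in enumerate(notes):
        if i + 1 < len(notes):
            next_start = notes[i + 1][1]
            if next_start - end < min_rest:
                # Short gap (early release) or overlap (late release).
                end = next_start
        result.append((pitch, start, end))
    return result
```

With `min_rest=2`, `[(60, 0, 3), (62, 4, 6), (64, 8, 9)]` becomes `[(60, 0, 4), (62, 4, 6), (64, 8, 9)]`: the one-step gap after the first note is closed, while the two-step gap is kept as a rest.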

In reply to Thanks for the feedback! The by shoogle

Why not simply bring up the MIDI import panel after real-time note entry, and allow the user to re-quantize as desired?

In reply to Why not simply bring up the by Isaac Weiss

The MIDI import panel has many options that are not relevant to note entry, but a simplified version might work and could maybe even enable the semi-realtime method to handle triplets.

In reply to The MIDI import panel has by shoogle

Wow! I'm glad you plan to look at it if you have the time. I also really like the idea of a simplified MIDI panel as a way to turn on triplets (and grace notes with the slash?).

The ways of doing this that you've come up with seem great and it'd be great if you had time for more. Best of luck to you!

In reply to Wow! I'm glad you have plans by speedmeteor101

Thanks! I think grace notes are less common and more difficult to implement than triplets, so it is less likely that I will manage to implement them in the time available, but you never know!

Thank you Peter! I downloaded a nightly build to try it out, and found this a remarkably intuitive and simple input method. I was using it to transcribe accordion music, and very quickly input a few pages doing one hand at a time. (Even if I had a foot pedal, one hand at a time is necessary to keep the accordion bass and treble separate because their ranges overlap.) It was nearly my first time using MuseScore. There was a minor hiccup on the last measure: it was necessary to tie the notes together manually (I had filled in exactly the correct number of measures, so I couldn't just move on to the next one), but that was not hard to correct, and otherwise everything went perfectly. I then used the MIDI file with Synthesia or PianoBooster to test whether I could play it perfectly from memory. (Just to complete the thought, generating accordion-style sheet music would require some additional tweaking, because chords are written in shorthand (rather like guitar chords or tabs), the bass notes are not voiced by the instrument exactly as they are written, and the stems point up or down depending on whether the note is a bass note or a chord.) Thanks!!